
Troubleshooting

...

Logsene Alerts

What are Logsene Alerts

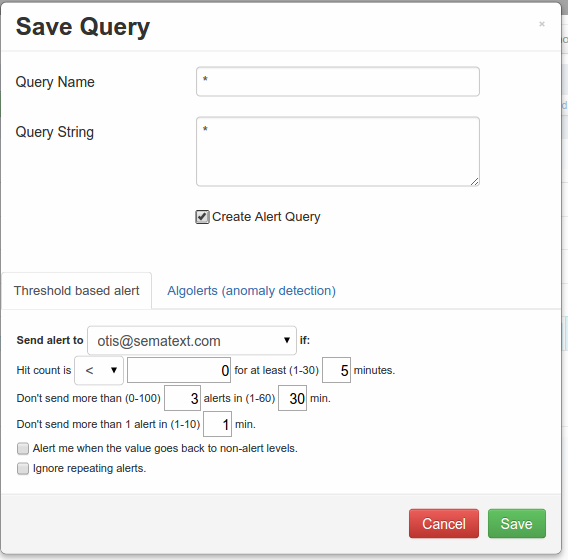

A: Logsene Alerts are based on saved searches. An alert sends a notification when its saved search meets a threshold condition in your logs (e.g. fewer than N matches in 5 minutes, or more than M matches in 3 minutes), or when the saved search detects a sudden change in the number or volume of matching logs, i.e. an anomaly.

How to create Logsene Alerts

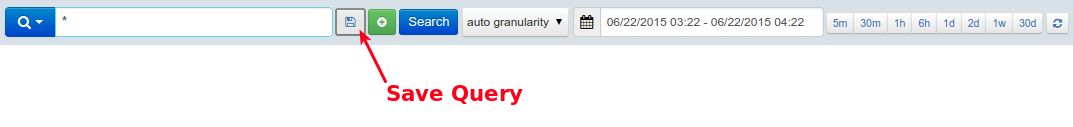

A: Logsene Alerts are added by clicking the disk icon on the right side of the search box, as shown in the screenshot above.

How to view Logsene Alerts

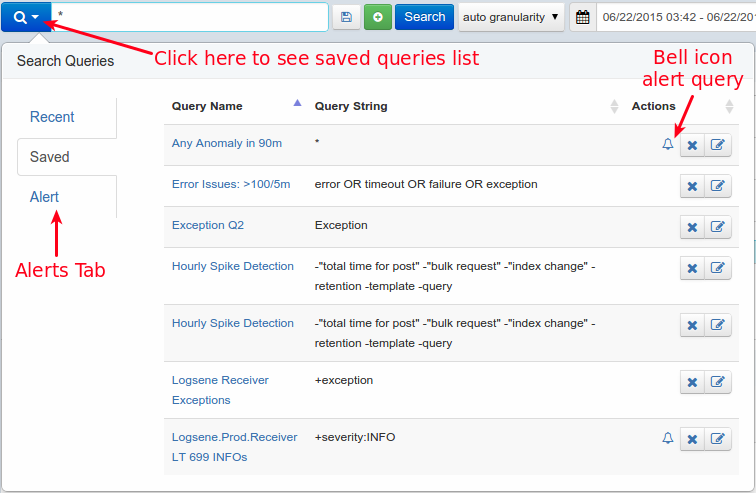

A: All saved queries, including Alert Queries, are listed in the "Search Queries" modal, because every Alert Query is also a Saved Query. To open this modal, click the magnifying glass icon to the left of the search field. On the "Saved" tab, Alert Queries are marked with a bell icon; to see only Alert Queries, choose the "Alert" tab.

What is the difference between threshold-based Alerts and Anomaly Detection (aka Algolerts)

A: If you have a clear idea of how many logs should match a given Alert Query, use threshold-based Alerts. For example, if you know you always have some ERROR-level log events and your logs typically contain fewer than 100 ERROR-level messages per minute, you can create an Alert Query that matches ERROR log events and notifies you when there are more than 100 such matches in 1 minute.
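To make the threshold condition concrete, here is a minimal Python sketch of the check "more than 100 matches in 1 minute". It only illustrates the logic: Logsene evaluates alert conditions for you, and the function name and the idea of passing in a list of match timestamps are hypothetical.

```python
from datetime import datetime, timedelta


def threshold_exceeded(match_times, now, window=timedelta(minutes=1), threshold=100):
    """Return True if more than `threshold` matches fall inside the window
    (now - window, now], e.g. "more than 100 ERROR matches in 1 minute"."""
    window_start = now - window
    # Count only the matches that fall inside the alert's time window.
    recent = sum(1 for t in match_times if window_start < t <= now)
    return recent > threshold


# Usage: one ERROR event every 0.4 s for the last 2 minutes -> 150 in the last minute.
now = datetime(2017, 5, 1, 12, 0)
errors = [now - timedelta(milliseconds=400 * i) for i in range(300)]
print(threshold_exceeded(errors, now))  # prints True
```

The window is half-open so that an event exactly one minute old is not counted twice across consecutive evaluations.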

If you don't know how many logs a given Alert Query typically matches, but you want to watch for sudden changes in volume, whether dips or spikes, use Algolerts (Anomaly Detection-based Alerts). An extreme but useful example is an Alert Query that matches all logs: "*", a single wildcard character. If you use it for an Algolert, Logsene will notify you when the overall volume of your logs suddenly changes. That may be a signal to look for what suddenly increased logging (e.g. something started logging lots of errors, exceptions, or timeouts), or why logging volume suddenly dropped (e.g. some of your servers or apps stopped working and sending logs).
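This FAQ does not describe the algorithm behind Algolerts, so the sketch below swaps in a deliberately simple z-score test to illustrate the general idea of flagging dips and spikes without a fixed threshold. It is not Logsene's anomaly detection; the function name, the per-minute counts and the 3-standard-deviation cutoff are all assumptions for illustration.

```python
from statistics import mean, stdev


def is_anomalous(history, current, z_threshold=3.0):
    """Simple z-score test: flag `current` when it lies more than
    `z_threshold` standard deviations away from the mean of `history`."""
    if len(history) < 2:
        return False  # too little history to estimate normal variation
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation
    if sigma == 0:
        return current != mu  # perfectly flat history: any change stands out
    return abs(current - mu) / sigma > z_threshold


# Usage: hypothetical per-minute counts for an Alert Query of "*" (all logs).
history = [1000, 1040, 980, 1010, 995, 1020, 990, 1005]
print(is_anomalous(history, 1015))  # prints False (normal fluctuation)
print(is_anomalous(history, 200))   # prints True (sudden dip)
print(is_anomalous(history, 5000))  # prints True (sudden spike)
```

Unlike the threshold check, nothing here encodes an absolute number of matches: "normal" is learned from recent history, which is why this style of alert suits queries whose typical volume you don't know.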

Can I send Alerts to HipChat, Slack, Nagios, or other WebHooks

S3 Archiving

How to obtain credentials from AWS

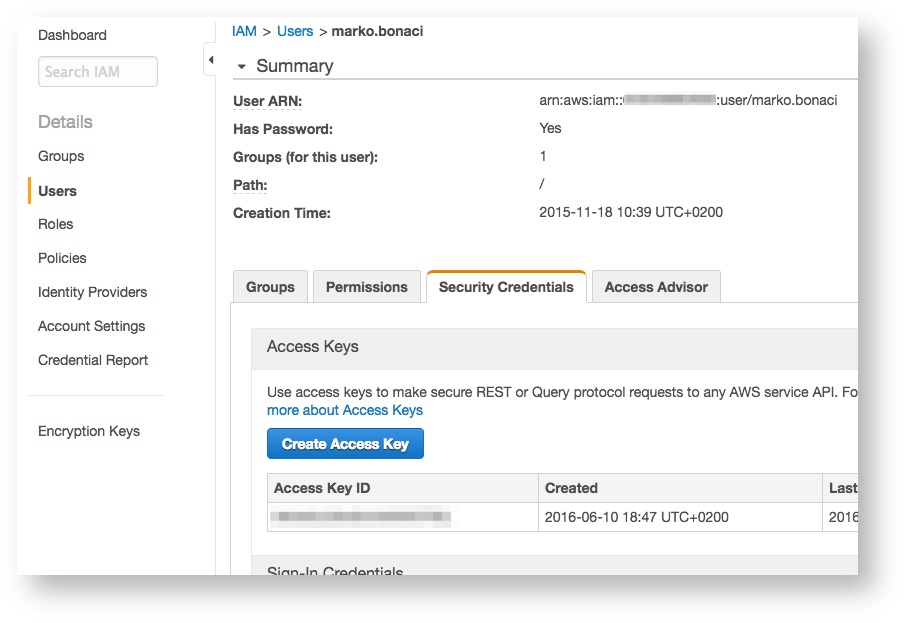

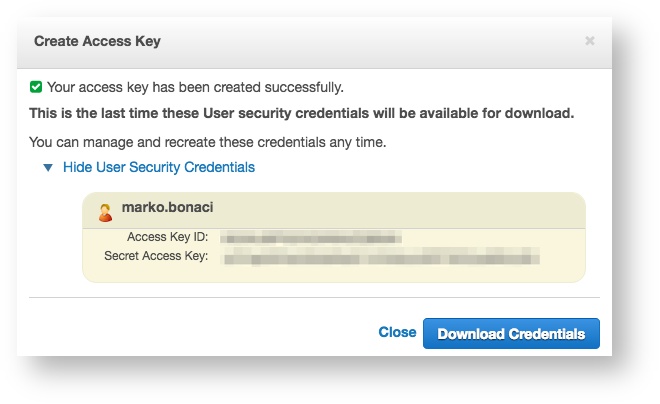

A: For Logsene AWS S3 Settings, besides the S3 bucket name, you'll need an Access Key ID and a Secret Access Key.

Log in to your AWS account, go to IAM > Users, and open (or create) the user you want to use for S3 uploads.

Click Create Access Key.

Note down the Access Key ID and Secret Access Key (AWS displays the Secret Access Key only when the key is created). You can also use Download Credentials to save them to a safe place, but it's not necessary.

How to set up S3 archiving for your Logsene app

A: In the Sematext web app, go to Integrations > Apps and choose Configure S3 from the row context menu (three-dots icon) of the app whose logs you want to ship to S3.

Paste the Access Key ID and Secret Access Key into the corresponding fields.

Enter the Bucket name (just the simple name, not the fully qualified ARN), choose a Compression option (see below for details about compression), and confirm with Verify and save.

Logsene then checks whether the information is valid using the AWS S3 API.

When the check completes, you'll see either a message confirming that the settings are valid or an error message.

Which permissions are required when using an AWS S3 bucket access policy

A: To verify access to your S3 bucket, Logsene first uses the credentials to log in and, if that succeeds, creates a dummy object inside the bucket.

If the object is created successfully, Logsene then deletes it.
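If you want to run a similar write-then-delete check yourself before saving the settings, here is a minimal Python sketch. It mirrors the steps just described but is not Logsene's code; the function name and the dummy key format are hypothetical, and it expects a client exposing boto3-style `put_object` and `delete_object` methods.

```python
import uuid


def verify_bucket_access(s3_client, bucket):
    """Write a dummy object to `bucket`, then delete it.

    `s3_client` is any object with boto3-style put_object/delete_object
    methods, such as a boto3 S3 client created with your access keys.
    Returns a tuple (ok, message)."""
    key = f"verify-{uuid.uuid4().hex}"  # hypothetical dummy object key
    try:
        s3_client.put_object(Bucket=bucket, Key=key, Body=b"")  # needs s3:PutObject
    except Exception as exc:  # with boto3 this is typically botocore's ClientError
        return False, f"cannot write to bucket: {exc}"
    try:
        s3_client.delete_object(Bucket=bucket, Key=key)  # needs s3:DeleteObject
    except Exception as exc:
        return False, f"dummy object written but cannot be deleted: {exc}"
    return True, "write and delete both succeeded"
```

With boto3 installed, a call could look like `verify_bucket_access(boto3.client("s3", aws_access_key_id="...", aws_secret_access_key="..."), "my-log-archive")`, where the bucket name is a placeholder.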

Because of these checks, the bucket policy must grant the following permissions when you save the AWS S3 settings:

- s3:GetObject
- s3:PutObject
- s3:DeleteObject

After verification is done, you can remove the s3:DeleteObject permission from the bucket policy.
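As a sketch, the Python function below generates a bucket policy granting those object permissions to the IAM user whose keys you gave Logsene. The statement ID, bucket name and user ARN are placeholders, and granting access to an IAM user via a bucket policy is one assumed setup among several; the `allow_delete` flag reflects the option of dropping s3:DeleteObject once verification has succeeded.

```python
import json


def build_bucket_policy(bucket, principal_arn, allow_delete=True):
    """Return an S3 bucket policy (JSON string) granting `principal_arn`
    s3:GetObject, s3:PutObject and, optionally, s3:DeleteObject.
    Pass allow_delete=False once Logsene has verified the bucket."""
    actions = ["s3:GetObject", "s3:PutObject"]
    if allow_delete:
        actions.append("s3:DeleteObject")
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "LogseneS3Archiving",  # hypothetical statement ID
                "Effect": "Allow",
                "Principal": {"AWS": principal_arn},
                "Action": actions,
                # Object-level actions apply to keys inside the bucket.
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }
    return json.dumps(policy, indent=2)


# Placeholder bucket name and IAM user ARN; substitute your own.
print(build_bucket_policy("my-log-archive",
                          "arn:aws:iam::123456789012:user/logsene-archiver",
                          allow_delete=False))
```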

How are logs compressed in S3

A: You can choose between two lossless compression codecs from the LZ77 family, LZ4 and LZF, both of which offer an excellent trade-off between speed and compression ratio.

If you choose the No compression option, logs are stored in raw, uncompressed form as JSON files.

How can I decompress logs archived in S3

A: You can decompress archived logs by installing the following command-line programs (then see `man lz4` or `man lzf` for further instructions):

Ubuntu/Debian:
- `sudo apt-get install liblz4-tool`
- `sudo apt-get install libcompress-lzf-java` (available since Ubuntu 15.04)

OS X:
- `brew install lz4`
- `brew install liblzf`

Which folder structure Logsene uses when uploading logs to S3

A: Logsene creates a folder hierarchy inside the bucket that you specify in settings. Its layout changed on May 01, 2017, as described below.

Note:

Since May 01, 2017, the folder hierarchy is:

sematext_[app-token-start]/[year]/[month]/[day]/[hour]

Here, [app-token-start] is the first segment of the app's token (the part before the first hyphen).

For example, for an app with token f333a7d7-ab55-4ce9-94a5-cdb44a704740, the folder path on May 01, 2017 at 11:20 PM UTC is:

sematext_f333a7d7/2017/05/01/23/
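When fetching archives for a time range, it can help to compute the folder names up front. The Python sketch below derives them from the app token and a UTC time following the sematext_[app-token-start]/[year]/[month]/[day]/[hour] layout; the function names are hypothetical, and it assumes timezone-aware datetimes.

```python
from datetime import datetime, timedelta, timezone


def s3_prefix(app_token, when):
    """Folder for a given time under the layout used since May 01, 2017:
    sematext_[app-token-start]/[year]/[month]/[day]/[hour]/
    `when` must be timezone-aware; it is converted to UTC."""
    token_start = app_token.split("-")[0]  # part before the first hyphen
    utc = when.astimezone(timezone.utc)
    return f"sematext_{token_start}/{utc:%Y/%m/%d/%H}/"


def hourly_prefixes(app_token, start, end):
    """Every hourly folder from the hour containing `start` up to `end`
    (both timezone-aware)."""
    current = start.astimezone(timezone.utc).replace(minute=0, second=0, microsecond=0)
    prefixes = []
    while current <= end:
        prefixes.append(s3_prefix(app_token, current))
        current += timedelta(hours=1)
    return prefixes


token = "f333a7d7-ab55-4ce9-94a5-cdb44a704740"
print(s3_prefix(token, datetime(2017, 5, 1, 23, 20, tzinfo=timezone.utc)))
# prints sematext_f333a7d7/2017/05/01/23/
```

Each prefix could then be downloaded with the AWS CLI, e.g. `aws s3 cp --recursive s3://<your-bucket>/sematext_f333a7d7/2017/05/01/23/ .`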

Note:

Before May 01, 2017, the folder hierarchy was flatter:

/<tokenMD5HexHash>/logsene_<date>/<hour>

For example: 856f4f9c3c084da08ec7ea9ad5d4cadf/logsene_2016-07-20/18
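For archives from before the change, the sketch below builds the older <tokenMD5HexHash>/logsene_<date>/<hour> path in Python. Several details are assumptions not confirmed here: that the MD5 is computed over the token string exactly as shown in the UI (UTF-8, hyphens included), that date and hour are in UTC, and that the hour is zero-padded. The example hash above cannot be used to check this, since its token isn't given, so verify against your own bucket before relying on it.

```python
import hashlib
from datetime import datetime, timezone


def legacy_s3_prefix(app_token, when):
    """Folder under the layout used before May 01, 2017:
    <tokenMD5HexHash>/logsene_<date>/<hour>

    ASSUMPTIONS: MD5 over the UTF-8 token string as shown in the UI;
    date and hour in UTC; hour zero-padded to two digits."""
    token_hash = hashlib.md5(app_token.encode("utf-8")).hexdigest()  # 32 hex chars
    utc = when.astimezone(timezone.utc)
    return f"{token_hash}/logsene_{utc:%Y-%m-%d}/{utc:%H}"


print(legacy_s3_prefix("f333a7d7-ab55-4ce9-94a5-cdb44a704740",
                       datetime(2016, 7, 20, 18, 5, tzinfo=timezone.utc)))
```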